When Vision entered the Yard

Gotilo Container (JACT) | Mundra Empty Yard & CFS

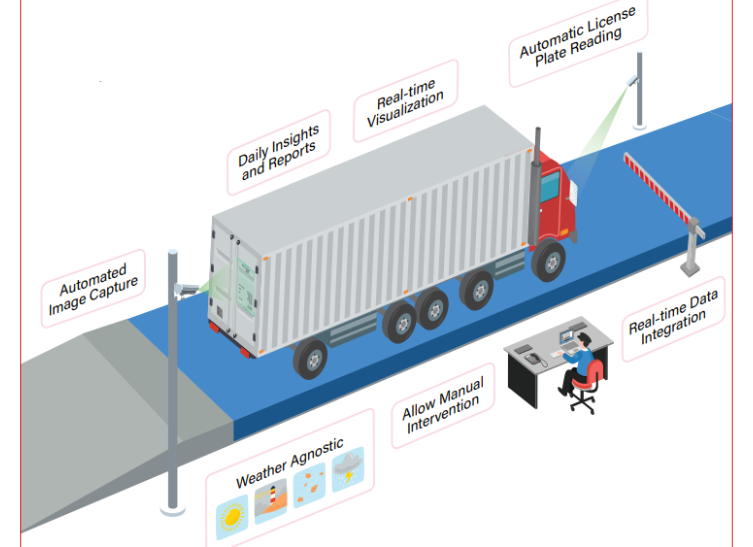

At Mundra Empty Yard & CFS, Gotilo Container (JACT), a smart cargo and gate automation system, was deployed. It automates container identification, inspection and yard movement through Vision AI.

Across a 25,000+ container yard, JACT brought order to one of logistics’ most time-critical environments. Manual entry and inspection gave way to automated flow. Visibility replaced assumption. Turnaround time improved without added complexity.

Objective

In large container yards, delays often stem from repetitive manual checks, fragmented data and limited real-time visibility.

The objective of deploying JACT was clear:

- Eliminate manual entry and inspection at the gate

- Reduce vehicle and container turnaround time (TAT)

- Strengthen compliance, audit readiness and operational accuracy

To achieve this, JACT was implemented across two operational pillars: Gate Automation and Yard Operations Monitoring.

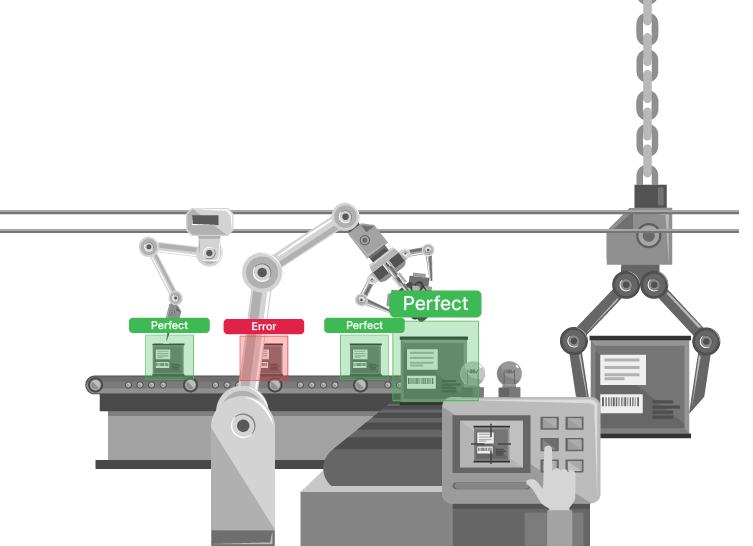

Gate Automation Module

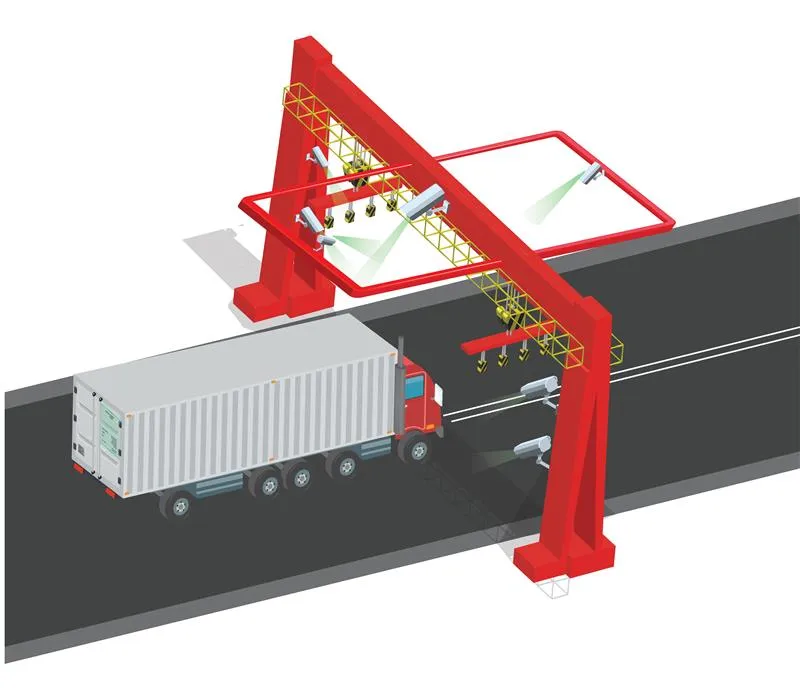

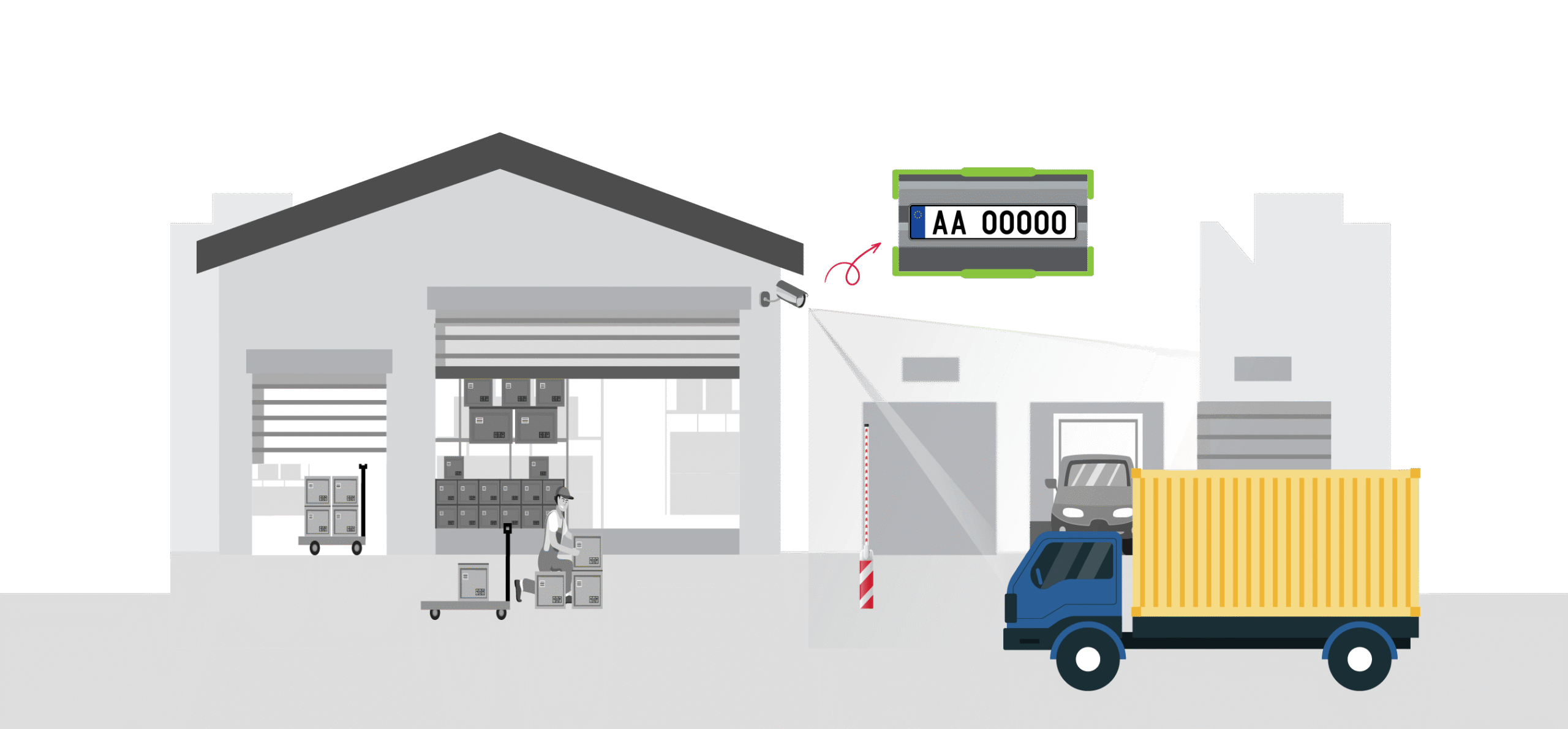

1. Automated OCR-based Container Identification

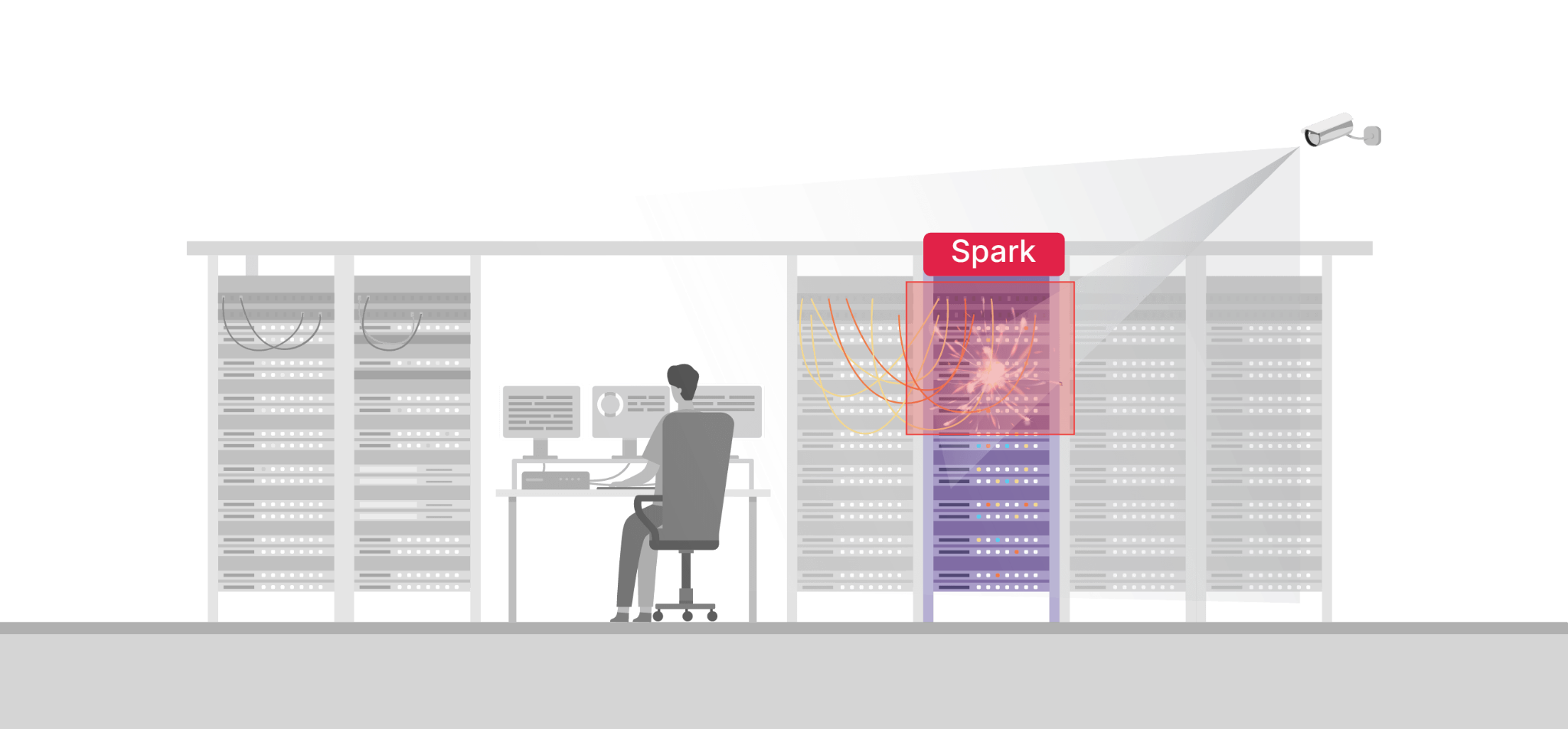

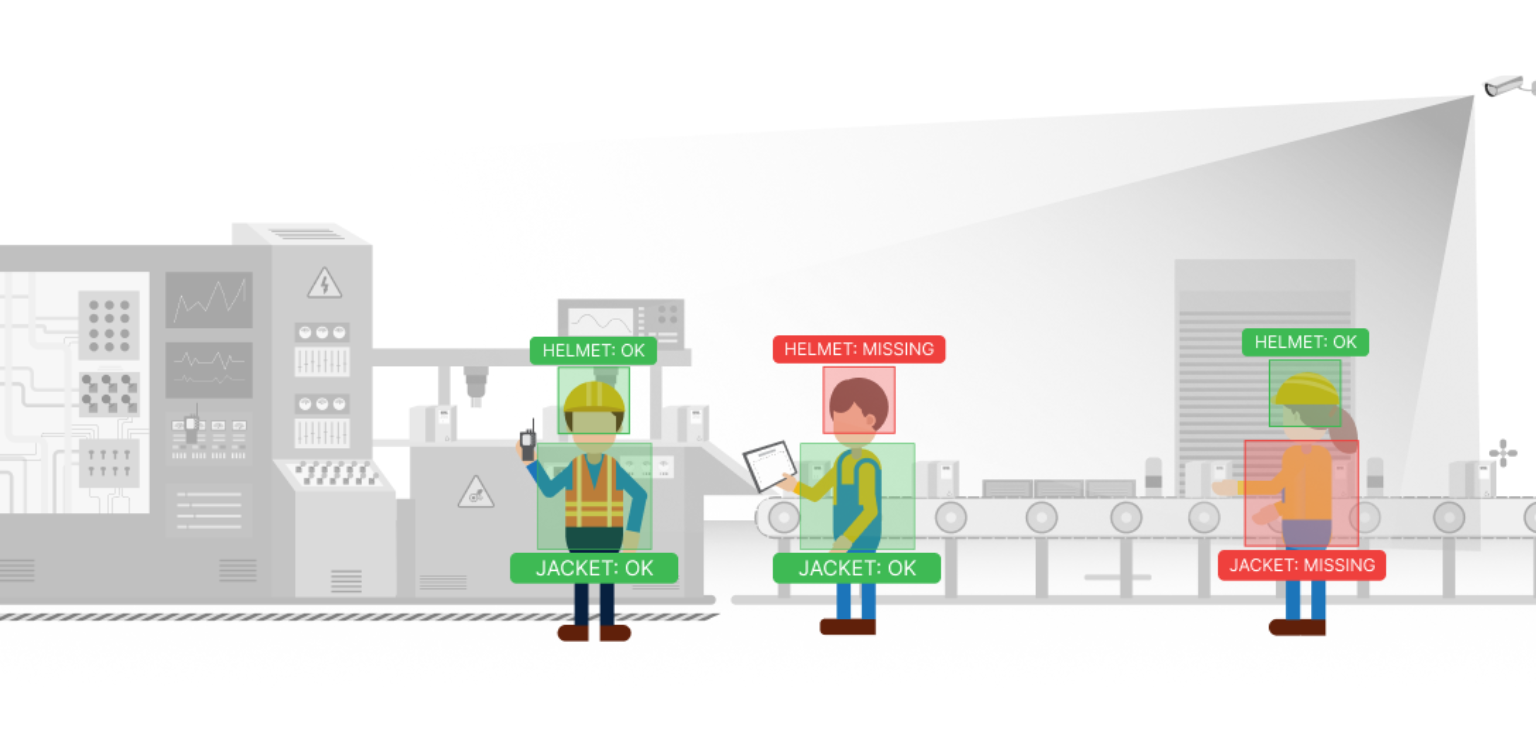

JACT uses Vision AI at the gate to automatically capture Container ID, ISO codes, weight, tare, payload, and seal presence. Data syncs in real time to the Gotilo AI dashboard and integrates seamlessly with TOS and YMS via secure APIs.

- Benefits: No manual entry | Reduced wait time | Compliance & audit readiness
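One common safeguard for OCR-read container numbers is the ISO 6346 check digit, which catches most single-character misreads before the record reaches the TOS or YMS. The sketch below is an illustration of that standard check, not a description of JACT's internal pipeline; the function names are hypothetical.

```python
import string


def _letter_values():
    # ISO 6346 assigns A=10 and counts upward, skipping multiples of 11
    # (11, 22, 33), so B=12, L=23, V=34 and Z=38.
    values, n = {}, 10
    for ch in string.ascii_uppercase:
        if n % 11 == 0:
            n += 1
        values[ch] = n
        n += 1
    return values


LETTER_VALUES = _letter_values()


def iso6346_check_digit(first_ten: str) -> int:
    """Compute the check digit from the owner code, category and serial."""
    if len(first_ten) != 10:
        raise ValueError("expected 4 letters followed by 6 digits")
    total = 0
    for position, ch in enumerate(first_ten.upper()):
        value = LETTER_VALUES[ch] if ch in LETTER_VALUES else int(ch)
        total += value * (2 ** position)  # weight doubles at each position
    return total % 11 % 10  # a remainder of 10 maps to 0


def is_valid_container_id(container_id: str) -> bool:
    """Return True if an 11-character container ID passes the check digit."""
    cid = container_id.replace(" ", "").upper()
    if len(cid) != 11:
        return False
    if not all(c in LETTER_VALUES for c in cid[:4]):
        return False
    if not all(c in string.digits for c in cid[4:]):
        return False
    return iso6346_check_digit(cid[:10]) == int(cid[10])
```

A read that fails this check can be routed for a re-scan or manual review instead of being written to downstream systems.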

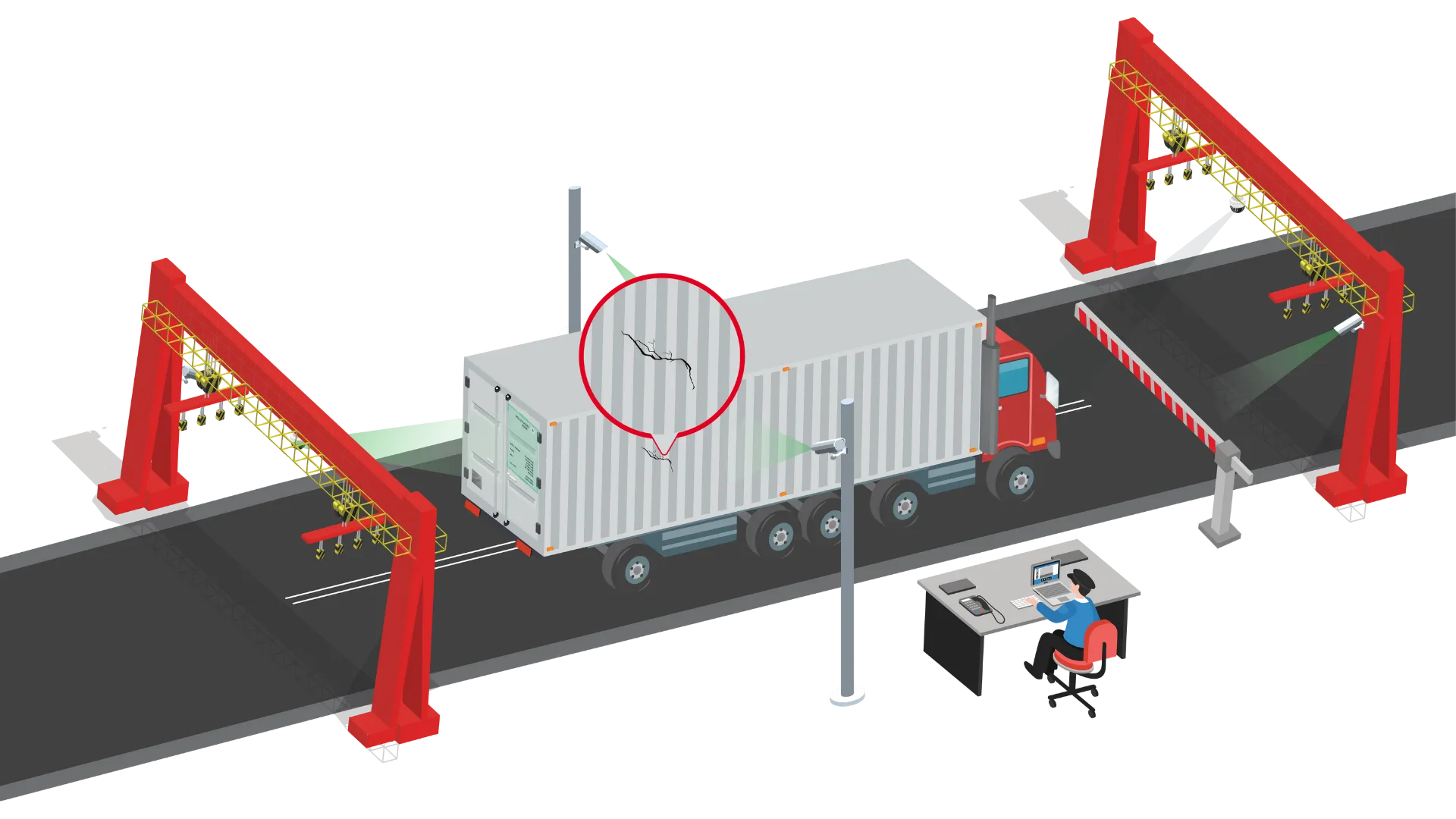

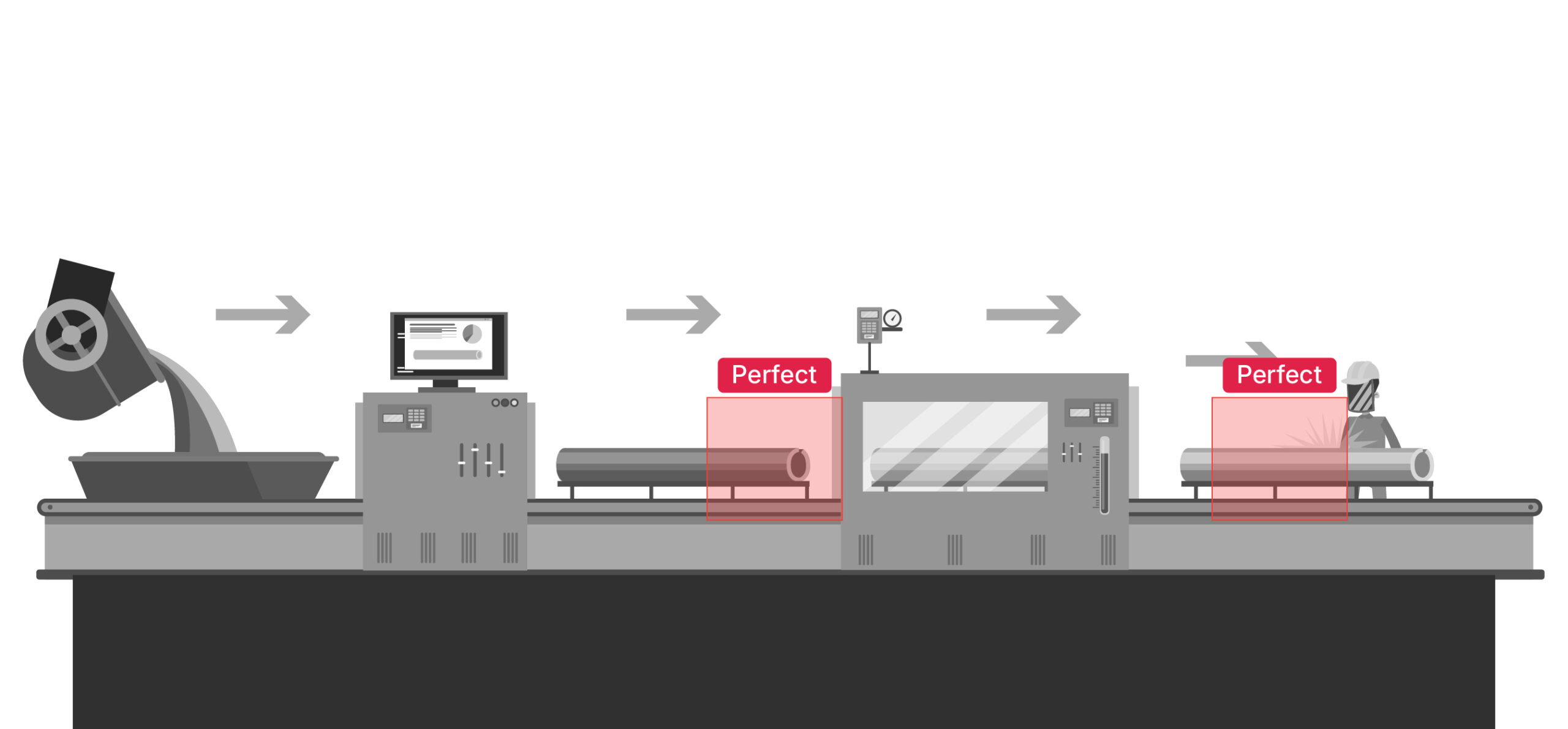

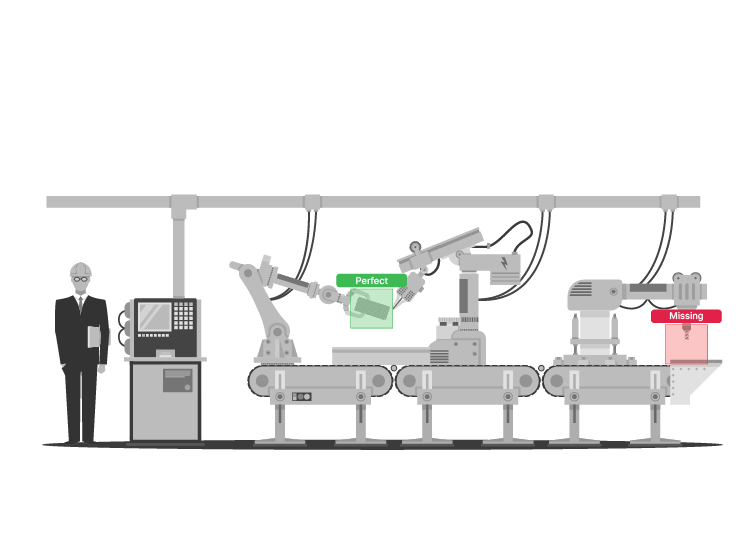

2. Container Damage Detection

Five-sided inspection (top, front, rear and both sides) detects rust, dents, holes and deformations. Each defect is mapped to the container ID, tagged by severity (minor, moderate or critical), and backed by photographic evidence.

- Benefits: No manual inspection | Faster gate operations | Verifiable records
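To make the shape of such an inspection record concrete, here is a minimal sketch of how one finding might be represented. The class and field names are assumptions for illustration only; the actual JACT schema is not described in this newsletter.

```python
from dataclasses import dataclass, field
from enum import Enum


class Severity(Enum):
    MINOR = 1
    MODERATE = 2
    CRITICAL = 3


@dataclass
class DamageFinding:
    # All field names are hypothetical, chosen to mirror the description above.
    container_id: str
    side: str          # "top", "front", "rear", "left" or "right"
    damage_type: str   # "rust", "dent", "hole" or "deformation"
    severity: Severity
    photo_paths: list[str] = field(default_factory=list)  # photographic evidence


def worst_severity(findings):
    """Return the most severe rating among the findings, or None if empty."""
    return max((f.severity for f in findings), key=lambda s: s.value, default=None)
```

Keeping the container ID and photo references on every finding is what makes the record verifiable later during an audit or a damage claim.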

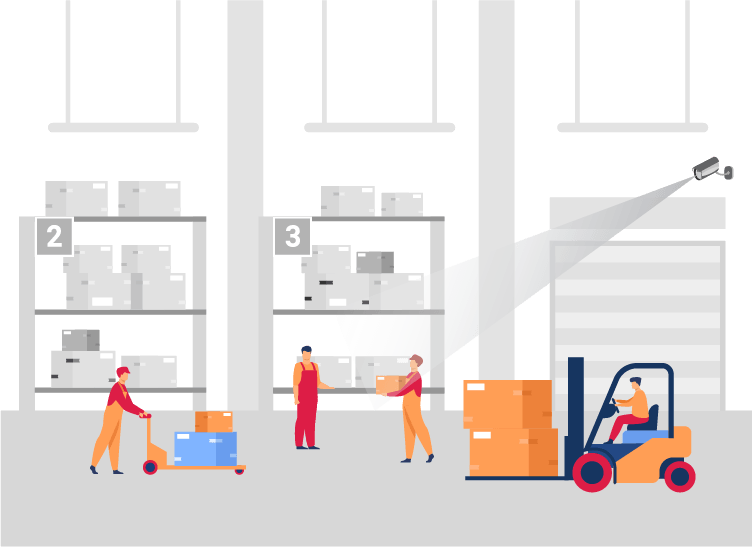

Yard Operations Monitoring

1. Container Geo-Location & Tracking

JACT tracks container movements using GPS, displays a live yard map, and records each container's journey and dwell time. It supports multiple types of equipment and triggers geofencing alerts for unauthorized movement.

- Benefits: Fewer misplacements | Faster container search | Better equipment planning
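A geofence check of this kind is often built on a point-in-polygon test. The sketch below uses the standard ray-casting method and treats coordinates as planar, which is a reasonable approximation over the area of a single yard. It is an assumed approach, not the confirmed JACT implementation, and the function names are hypothetical.

```python
def point_in_polygon(point, polygon):
    """Ray casting: count how many polygon edges a ray to the right crosses."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point count.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def geofence_violations(track, zone):
    """Return the (timestamp, position) fixes that fall outside the zone."""
    return [(t, pos) for t, pos in track if not point_in_polygon(pos, zone)]


def dwell_seconds(track, zone):
    """Sum the time between consecutive fixes that are both inside the zone."""
    total = 0
    for (t0, p0), (t1, p1) in zip(track, track[1:]):
        if point_in_polygon(p0, zone) and point_in_polygon(p1, zone):
            total += t1 - t0
    return total
```

For example, a track of `[(0, (5, 5)), (60, (6, 5)), (120, (15, 5))]` against a 10 by 10 square zone yields one violation at the third fix and 60 seconds of dwell time.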

2. Vehicle Activity Tracking

JACT provides real-time visibility of vehicle status (active, idle, loading), utilization heatmaps, overspeed and idle alerts, and geofencing-based movement control.

- Benefits: Lower fuel consumption | Higher productivity | Reduced maintenance costs
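Overspeed and idle alerts can be derived from a stream of timestamped speed readings. The following is a minimal sketch under assumed thresholds (a 20 km/h yard speed limit and a 5-minute idle limit); both values and the function name are hypothetical, not figures from the Mundra deployment.

```python
def telemetry_alerts(samples, speed_limit_kmh=20.0, idle_limit_s=300.0):
    """Scan (timestamp_s, speed_kmh) samples and return (timestamp, kind) alerts."""
    alerts = []
    idle_start = None       # timestamp at which the current stationary run began
    idle_reported = False   # ensures one idle alert per stationary run
    for t, speed in samples:
        if speed > speed_limit_kmh:
            alerts.append((t, "overspeed"))
        if speed < 1.0:  # treat anything under 1 km/h as stationary
            if idle_start is None:
                idle_start = t
                idle_reported = False
            elif not idle_reported and t - idle_start >= idle_limit_s:
                alerts.append((t, "idle"))
                idle_reported = True
        else:
            idle_start = None  # vehicle moved, so the stationary run ends
    return alerts
```

Reporting each stationary run only once keeps the alert feed readable when a vehicle is parked for a long period.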

How Gotilo Container Works

Turning observation into operational clarity

- Sees every Container :- Gotilo uses Vision AI and OCR to capture container IDs, condition, movement and location automatically, without manual intervention.

- Thinks in Real Time :- All gate and yard data flows into the Gotilo AI platform, where it is analysed instantly for visibility, alerts and operational insights.

- Connects the Ecosystem :- Gotilo integrates securely with TOS, YMS and port systems, enabling seamless data exchange across terminals, yards and logistics partners.

- Acts before Issues happen :- From damage detection to geofence violations and dwell delays, Gotilo flags risks early, helping teams act faster and smarter.

AI for Industry & Public Good

The India AI Impact Summit 2026, led by PM Modi, promotes responsible AI adoption across industries, emphasising efficiency, compliance and ethical standards.

Gotilo Container aligns with this vision, proving that AI can be both operationally powerful and trustworthy in real-world environments.

From the CEO’s Desk

While walking through… I saw the friction points: gate queues, manual checks and missing visibility. That’s where Gotilo Container steps in, turning every container and vehicle movement into real-time insight.

At CES & NRF 2026, seeing Gotilo in action reinforced that the future of AI is not complexity. It is the seamless presence of machines that see, analyze and act, empowering humans instead of adding steps.

With initiatives like India’s AI Impact Summit 2026, it is clear the world is aligning toward responsible and practical AI. For us, the mission is making every implementation intelligent, ethical and impactful from yards to retail floors.

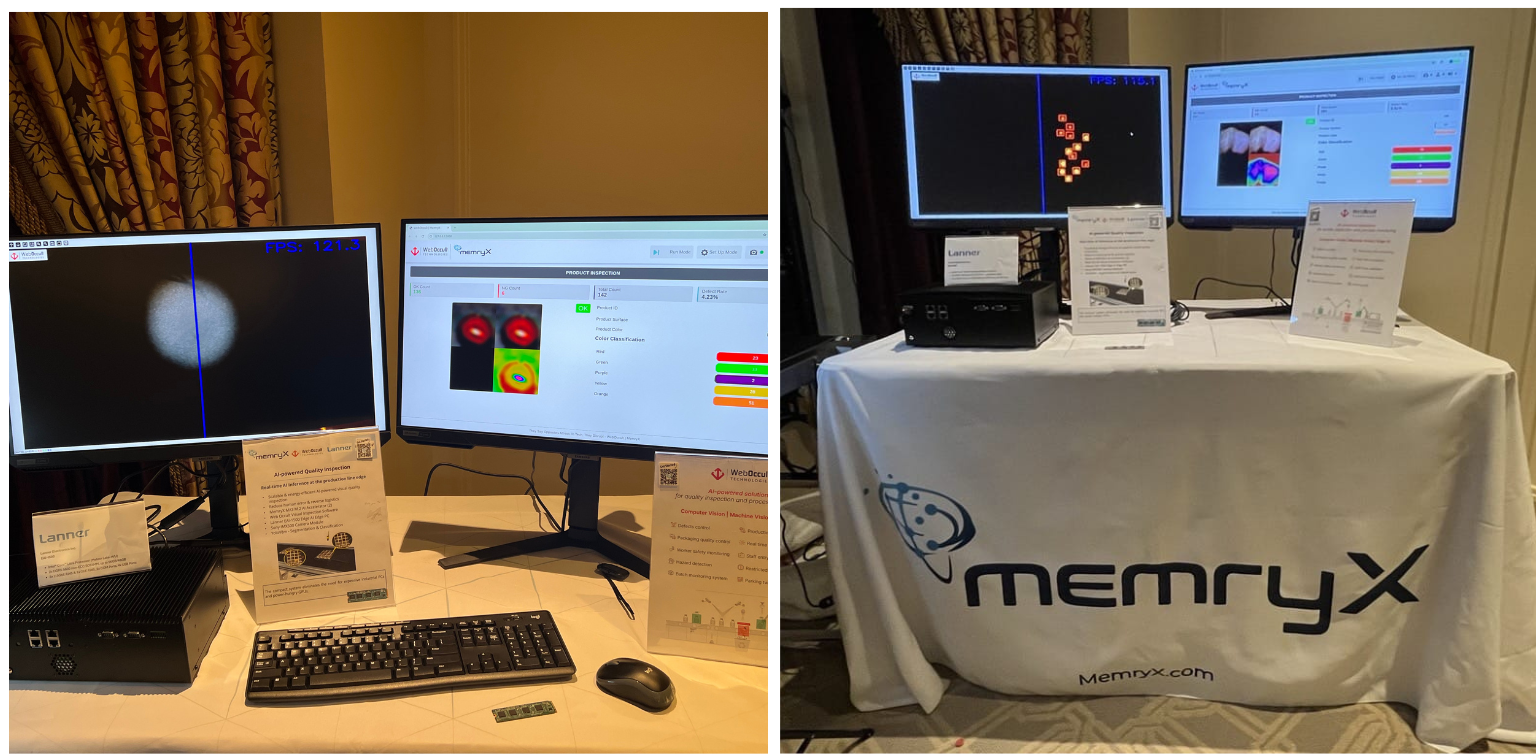

Exhibitions

Gotilo Spot spotted at NRF and CES

CES 2026 | Las Vegas, USA

There’s something unique about watching AI in a live environment. At CES, our deployment-ready Vision AI pipeline demonstrated how edge-optimised systems can deliver accuracy, speed and tangible business outcomes. From logistics to production floors, every demo highlighted that AI is no longer experimental. It is ready to perform in real-world operations.

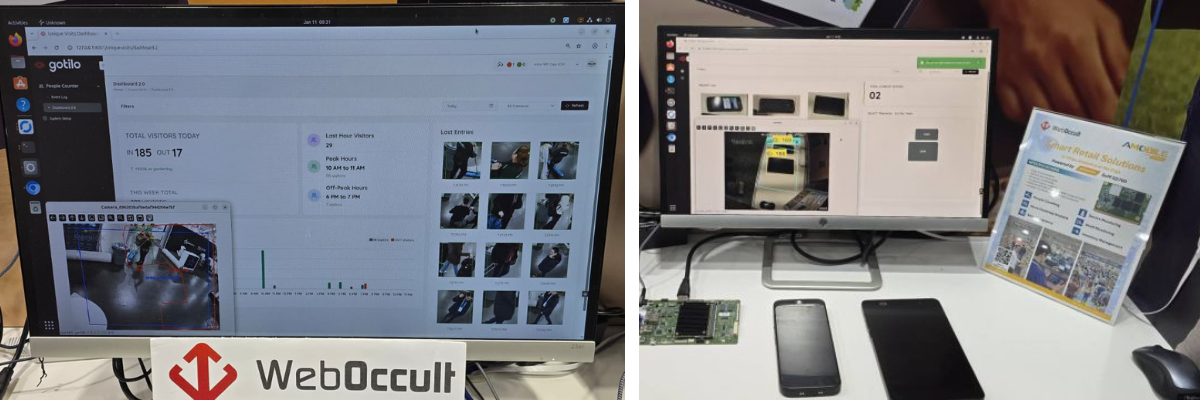

NRF 2026 | New York City, USA

At NRF, the focus was retail. Attendees explored Gotilo Spot live at our booth, seeing how Vision AI transforms everyday operations. From monitoring compliance to tracking footfall and hazards, WebOccult’s solutions showed that AI does not just observe… it enhances decision-making and efficiency on the spot.

When Vision Met the World, Twice in Japan

Inside the Gotilo-verse
