NVIDIA Jetson Thor

Ruchir Kakkad

CEO & Co-founder

Powering the Next Era of Vision AI

Artificial intelligence has moved from labs and data centers into the real world.

Today, highway cameras are expected to analyze traffic, factory robots must make split-second safety decisions, and drones survey farms with intelligence that goes far beyond simple recording.

The challenge?

Edge devices have always been limited. They either lacked the raw horsepower to run advanced AI models, or they depended too much on cloud servers, which brought latency, bandwidth costs, and privacy concerns.

NVIDIA's new Jetson AGX Thor is designed to change that equation. With supercomputer-class performance in a compact module, Jetson Thor can run heavy Vision AI workloads directly at the edge, where milliseconds matter most.

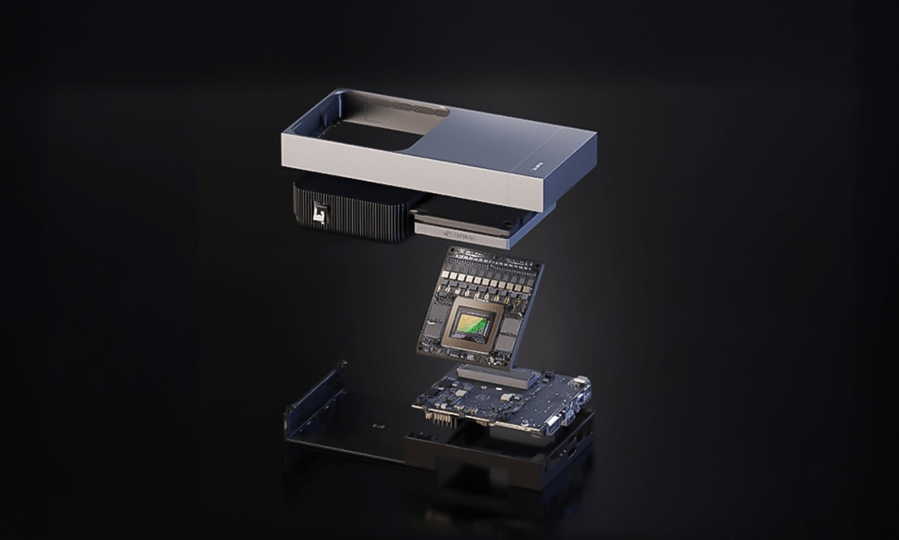

Jetson Thor is NVIDIA's most advanced embedded AI system yet, built on the Blackwell GPU architecture. It has been described as a supercomputer for robots and edge devices, and not without reason.

At its core, Jetson Thor offers:

To put it simply, Jetson Thor brings data center power into a module small enough to fit into a drone, a robot, or an on-site server box.

The Jetson Orin series has powered many of today's smart cameras, robots, and edge AI systems. But compared to Orin, Thor is a giant leap forward.

This isn't just an upgrade; it's a transformation. Where Orin could handle a handful of AI workloads at once, Thor can run multiple heavy models simultaneously, from video analytics to generative AI, without breaking a sweat.

Computer vision is one of the most demanding AI workloads. Every frame of a video contains millions of pixels, and with multiple cameras streaming simultaneously, the processing requirements skyrocket. Add to that the need for real-time responses, and you see why the edge has struggled.
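To make "the processing requirements skyrocket" concrete, here is a small back-of-the-envelope calculation. The scenario (eight 4K cameras at 30 fps) is an illustrative assumption, not a figure from NVIDIA; the point is how quickly pixel throughput grows and how little time each frame gets.

```python
def pixel_rate(width: int, height: int, fps: float, streams: int) -> float:
    """Pixels per second that must be processed across all camera streams."""
    return width * height * fps * streams


def frame_budget_ms(fps: float) -> float:
    """Time available per frame (ms) if each frame must finish before the next arrives."""
    return 1000.0 / fps


if __name__ == "__main__":
    # Assumed scenario for illustration: 8 cameras, 4K (3840x2160), 30 fps each.
    rate = pixel_rate(3840, 2160, 30, 8)
    print(f"{rate / 1e9:.2f} gigapixels/s")           # 1.99 gigapixels/s
    print(f"{frame_budget_ms(30):.1f} ms per frame")  # 33.3 ms per frame
```

Roughly two billion pixels per second, with about 33 ms to finish each frame, is the kind of load that pushed earlier edge systems toward the cloud.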

Here's where Jetson Thor makes the difference:

Thor can decode and process multiple 4K and 8K video streams at once. This allows organizations to analyze dozens of cameras simultaneously, whether in a smart city or a large factory floor.
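Analyzing dozens of cameras on one device usually means grouping frames from many streams into batches for the model. The sketch below shows one common policy, keeping only the newest unprocessed frame per camera so a busy model skips stale frames rather than falling behind. The `Frame` and `LatestFrameBatcher` names are hypothetical, and this is a generic illustration rather than how any NVIDIA SDK implements batching.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Frame:
    camera_id: str
    timestamp_ms: int  # capture time; a real frame would also carry decoded pixels


class LatestFrameBatcher:
    """Keep only the newest undelivered frame per camera and hand out bounded batches."""

    def __init__(self, max_batch: int) -> None:
        if max_batch < 1:
            raise ValueError("max_batch must be at least 1")
        self.max_batch = max_batch
        self._latest: Dict[str, Frame] = {}

    def push(self, frame: Frame) -> None:
        # A newer frame replaces an older, still-unprocessed one from the same camera,
        # so a slow model drops stale frames instead of building up a backlog.
        prev = self._latest.get(frame.camera_id)
        if prev is None or frame.timestamp_ms >= prev.timestamp_ms:
            self._latest[frame.camera_id] = frame

    def next_batch(self) -> List[Frame]:
        # Serve the cameras whose pending frames are oldest first, so none is starved.
        ready = sorted(self._latest.values(), key=lambda f: f.timestamp_ms)
        batch = ready[: self.max_batch]
        for f in batch:
            del self._latest[f.camera_id]
        return batch
```

With `max_batch=2`, pushing frames from `cam-a` (t=100, then t=133), `cam-b` (t=110), and `cam-c` (t=120) yields a first batch of `cam-b` and `cam-c`, then a second batch containing only the newest `cam-a` frame.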

With Multi-Instance GPU (MIG), a single Jetson Thor can run several AI models in parallel, each in its own isolated GPU partition. For example:

All in real time, all on one device.
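The key idea behind Multi-Instance GPU partitioning is static reservation: each model gets a fixed slice of the GPU, so a spike in one model's load cannot starve the others. The sketch below models only that bookkeeping. The abstract "units", the model names, and the `GpuPartitionPlan` class are hypothetical; they do not correspond to actual Jetson Thor MIG profiles or configuration tools.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class GpuPartitionPlan:
    """Static plan giving each model its own fixed slice of GPU capacity.

    'units' is an abstract capacity measure used only for illustration.
    """
    total_units: int
    assignments: Dict[str, int] = field(default_factory=dict)

    def used(self) -> int:
        return sum(self.assignments.values())

    def assign(self, model_name: str, units: int) -> None:
        if units <= 0:
            raise ValueError("a partition needs at least one unit")
        if model_name in self.assignments:
            raise ValueError(f"{model_name} already has a partition")
        free = self.total_units - self.used()
        if units > free:
            raise ValueError(
                f"not enough capacity for {model_name}: {free} units free, {units} requested"
            )
        self.assignments[model_name] = units

    def share(self, model_name: str) -> float:
        """Fraction of the GPU reserved for a model, independent of other models' load."""
        return self.assignments[model_name] / self.total_units


if __name__ == "__main__":
    # Hypothetical plan: one detector, one plate reader, one vision-language summarizer.
    plan = GpuPartitionPlan(total_units=8)
    plan.assign("vehicle-detector", 4)
    plan.assign("plate-reader", 2)
    plan.assign("vlm-summarizer", 2)
    print(plan.assignments, plan.share("vehicle-detector"))
```

Rejecting an assignment that exceeds capacity, instead of oversubscribing, is what keeps each model's latency predictable.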

Thor's design delivers up to 3.5× better performance per watt than Orin. This makes it practical for always-on systems like surveillance networks, drones, or autonomous machines that run on limited power.
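A performance-per-watt gain can be read two ways: more throughput at the same power, or the same throughput at lower power. The arithmetic below uses the article's "up to 3.5×" figure with a hypothetical baseline of 100 frames per second at 35 W; the baseline numbers are assumptions for illustration, not measured Orin or Thor values.

```python
def energy_per_frame_mj(power_w: float, frames_per_s: float) -> float:
    """Energy per processed frame in millijoules (W / (frames/s) = J/frame)."""
    return power_w * 1000.0 / frames_per_s


def power_for_same_throughput(baseline_power_w: float, efficiency_gain: float) -> float:
    """Power needed for the same workload if performance per watt improves by efficiency_gain."""
    return baseline_power_w / efficiency_gain


if __name__ == "__main__":
    baseline_w, fps = 35.0, 100.0  # hypothetical baseline workload
    thor_w = power_for_same_throughput(baseline_w, 3.5)
    print(thor_w)                             # 10.0
    print(energy_per_frame_mj(baseline_w, fps))  # 350.0
    print(energy_per_frame_mj(thor_w, fps))      # 100.0
```

For a battery-powered drone or a solar-powered roadside camera, cutting energy per frame this way translates directly into longer runtime.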

Unlike previous Jetson modules, Thor has the compute and memory headroom to run large transformer-based and vision-language models locally. That means systems don't just see; they can also describe and interpret what they see.

Imagine a surveillance system that not only flags "person detected" but generates a summary like: "At 2:45 PM, an individual entered from the north gate and stayed near the exit for 10 minutes."

This fusion of vision and language is now possible, right at the edge.

Traffic cameras equipped with Jetson Thor can monitor congestion, detect violations, and adjust signals in real time. Airports can use it to scan runways with multiple feeds, detecting hazards instantly.

Factories can deploy Thor-powered systems for quality inspection. Multiple models can check for cracks, labeling errors, and worker safety in parallel, all running on one device.
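Running several inspection models on the same frame is a fan-out/fan-in pattern: dispatch the frame to every model at once, then merge the findings. In the sketch below the three "models" are hypothetical stubs that read flags from a dictionary standing in for a camera frame; a real deployment would run GPU inference, possibly with each model in its own GPU partition. The thread pool only illustrates the concurrency structure.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Finding = List[str]


# Hypothetical stand-ins for three independent inspection models.
def crack_detector(frame: Dict) -> Finding:
    return ["crack"] if frame.get("crack") else []


def label_checker(frame: Dict) -> Finding:
    return [] if frame.get("label_ok", True) else ["label_error"]


def safety_monitor(frame: Dict) -> Finding:
    return ["missing_helmet"] if frame.get("worker_without_helmet") else []


MODELS: Dict[str, Callable[[Dict], Finding]] = {
    "cracks": crack_detector,
    "labels": label_checker,
    "safety": safety_monitor,
}


def inspect(frame: Dict, pool: ThreadPoolExecutor) -> Dict[str, Finding]:
    """Send the same frame to every model concurrently and collect each model's findings."""
    futures = {name: pool.submit(model, frame) for name, model in MODELS.items()}
    return {name: fut.result() for name, fut in futures.items()}


if __name__ == "__main__":
    with ThreadPoolExecutor(max_workers=len(MODELS)) as pool:
        print(inspect({"crack": True, "label_ok": False}, pool))
```

Because every model sees the same frame independently, adding a new check means adding one entry to `MODELS` without touching the others.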

A Thor-powered edge system can replace bulky video servers by analyzing feeds on-site. From face recognition to anomaly detection, everything happens locally, improving both speed and privacy.

Robots can fuse camera, LiDAR, and other sensor data to navigate complex environments. Agricultural drones can detect crop health and weeds, making real-time decisions mid-flight, without relying on cloud connectivity.
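One common building block of camera-LiDAR fusion is projecting LiDAR points into the camera image, so that each 3D point can be matched to the object a vision model detected at that pixel. The sketch below applies a rigid transform followed by a standard pinhole projection. The calibration values are hypothetical, and it assumes a LiDAR frame with x forward, y left, z up and a camera frame with x right, y down, z forward.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Matrix3 = Sequence[Sequence[float]]


def lidar_to_camera(p: Vec3, R: Matrix3, t: Vec3) -> Vec3:
    """Rigid transform from the LiDAR frame to the camera frame: p_cam = R @ p + t."""
    x, y, z = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
    return (x, y, z)


def project(p_cam: Vec3, fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection to pixel coordinates; None for points behind the camera."""
    x, y, z = p_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


if __name__ == "__main__":
    # Hypothetical extrinsics: axes remapped, sensors assumed co-located (t = 0).
    R = ((0.0, -1.0, 0.0), (0.0, 0.0, -1.0), (1.0, 0.0, 0.0))
    t = (0.0, 0.0, 0.0)
    # Point 10 m ahead, 1 m to the left, 0.5 m up, seen by an assumed 1920x1080 camera.
    cam = lidar_to_camera((10.0, 1.0, 0.5), R, t)
    print(project(cam, 1000.0, 1000.0, 960.0, 540.0))  # (860.0, 490.0)
```

Once points land on pixels, a detection's bounding box can be assigned a distance from the LiDAR points that fall inside it.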

Jetson Thor doesn't stand alone. It's part of NVIDIA's rich AI software ecosystem:

This means migrating from Jetson Orin to Thor is straightforward: applications can be optimized quickly to take advantage of Thor's expanded capabilities.

The launch of NVIDIA Jetson Thor is more than a product release; it's a milestone for Vision AI at the edge.

By combining massive compute power, multi-model scalability, and support for generative AI, Thor enables businesses to run smarter, faster, and more private AI systems than ever before.