WebOccult Insider | Oct 25

Ruchir Kakkad

CEO & Co-founder

When Vision Met the World, Twice in Japan

From reading precision to measuring presence, WebOccult | Gotilo brought Vision AI to life across two exhibitions in Japan.

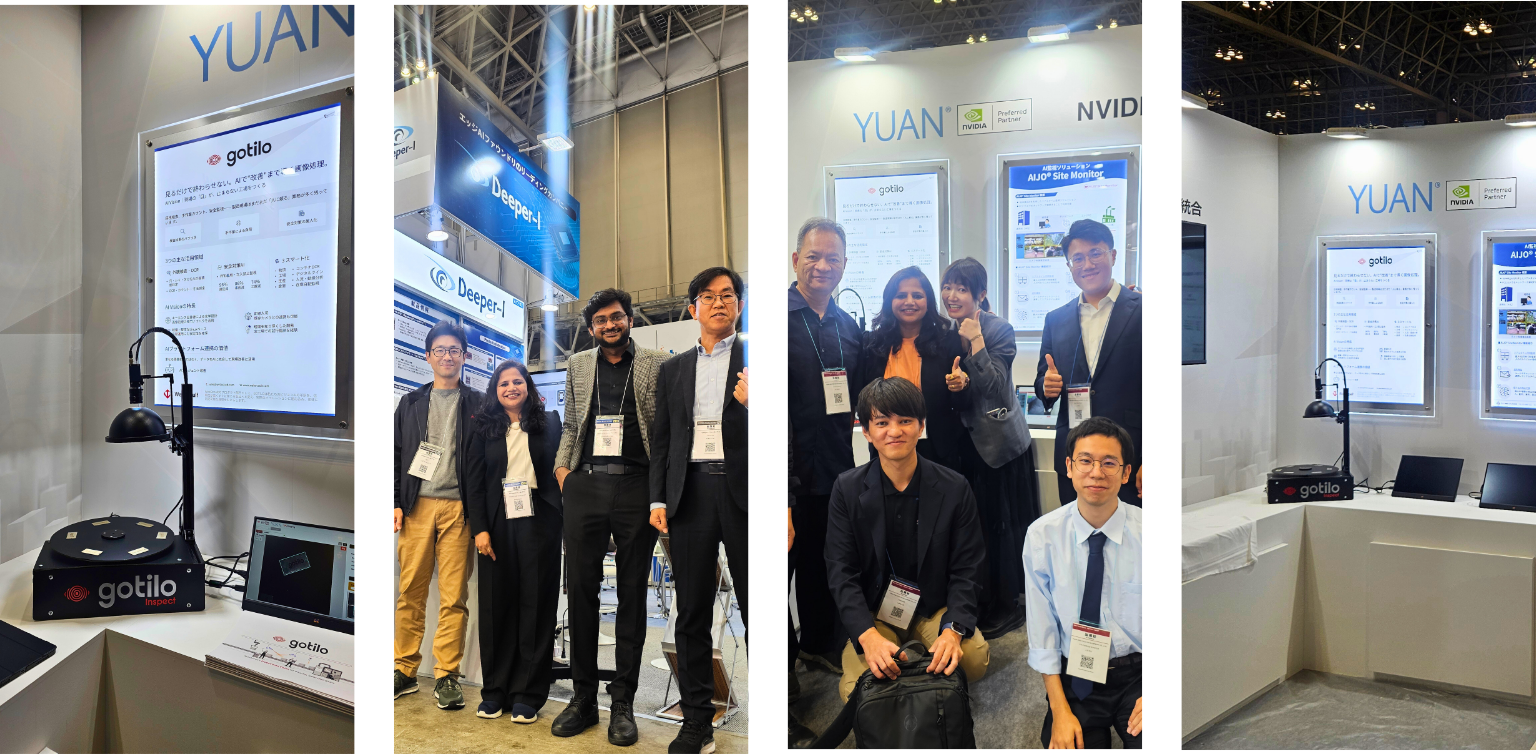

This month, WebOccult | Gotilo marked two milestones in Japan, each one reflecting a different side of Vision AI.

At NexTech Week Tokyo 2025, co-exhibiting with YUAN, the team unveiled the Plate Inspection and OCR model, a live system that reads, identifies, and verifies industrial plates with near-human accuracy. The model transformed recognition into understanding, showing how Vision AI can read beyond the surface to interpret meaning at scale.

Soon after, at Japan IT Week 2025, the team presented the Parking Occupancy and Dwell Time model, a live demonstration of edge-based visibility.

The system measured movement, observed dwell patterns, and visualized occupancy in real time, proving that clarity performs best when it stays close to action.

Across both exhibitions, one message stood out: the future of Vision AI lies not in simulation, but in presence, in technologies that see, decide, and deliver in the moment they are needed.

As October ends, we extend our warm wishes for Diwali and Halloween to our clients, partners, and global collaborators.

The team now looks ahead to Embedded World USA 2025, set for the first week of November, ready to bring Vision AI closer to the world once again.

There is something rare about standing beside a system you’ve built and watching it work, not in a controlled lab, but in the world it was meant for.

That feeling returned twice this month, both times in Japan.

At NexTech Week Tokyo, we stood among people who saw the Gotilo Inspect Plate Inspection and OCR model in action, a camera that doesn’t just capture light, but learns to read it. Weeks later, at Japan IT Week, another crowd gathered around the Parking Occupancy and Dwell Time model, where movement turned into measurable rhythm.

Both moments carried the same silence before understanding, the quiet pause when technology becomes self-explanatory.

Exhibitions are often about scale, but what stays with me are the small conversations: an engineer tracing lines on a demo screen, a student asking how machines learn to notice what we overlook. Those are the moments that define progress.

Now, as we prepare for Embedded World USA 2025 in Anaheim, California, I think of this as a continuation, not a departure. The models we carry have changed and the geography has shifted, but the idea remains constant: vision, when designed with intention, should travel as easily as light does.

If innovation is a journey, then every frame we process is a step forward: quiet, deliberate, and bright enough to see what comes next.

USA | Embedded World

(4-6 November, 2025)

Co-exhibiting with YUAN

USA | Embedded World

(4-6 November, 2025)

Co-exhibiting with Beacon Embedded + MemryX

Every city tells its story through movement, in how people travel, where they pause, and how long they stay.

For decades, this rhythm of arrival and waiting has existed without measurement. We’ve counted vehicles, not behavior; space, not time.

AI Vision technology changes that conversation. It gives parking systems a new vocabulary, one built on visibility, not assumption. Cameras no longer just record; they interpret. Each frame becomes a record of how spaces breathe, how patterns form, and how decisions can evolve with precision.

The future of parking management lies in this quiet intelligence. When every slot can speak for itself, the city begins to answer more complex questions: How efficiently are we using our shared spaces? What patterns of movement define our productivity? How do we reduce idle time without building more infrastructure?

AI Vision doesn’t replace human understanding; it extends it. It turns invisible pauses into measurable opportunity, a new kind of data that designs better cities, smoother logistics, and sustainable economies.

Space will always be limited.

Time will always move forward.

The value lies in how clearly we can see both.

Inside the Gotilo-verse

Every few decades, an idea reshapes how industries perceive themselves.

Not through disruption, but through understanding.

The Gotilo-verse was born from such an idea: the belief that visibility can become the foundation of intelligence. It isn’t a platform or a product line; it’s an evolving world of AI Vision systems that learn, adapt, and translate visual reality into measurable logic.

For years, technology has promised automation. But automation alone is blind. It performs without context. The Gotilo-verse introduces a different kind of intelligence, one that watches first, then decides. It gives sight to environments that were once silent: a factory floor, a shipping dock, a parking structure, a warehouse aisle.

In this ecosystem, each solution becomes a living node of awareness. A camera at a manufacturing line understands quality in motion, noticing surface flaws invisible to the human eye. A vision system in a logistics yard identifies containers, tracks dwell time, and improves throughput without requiring new sensors. Retail outlets study shelf stock and foot traffic in real time. Farms analyze plant growth by light reflection and leaf pattern.

Every setting becomes a new dimension of the Gotilo-verse, distinct in purpose, connected by vision.

What makes this universe remarkable isn’t its scale, but its sensitivity. It’s the ability to think where the work happens, not in distant servers, but at the edge. Each frame processed becomes a decision made locally, instantly, and intelligently.

For emerging markets, this shift is transformational. They no longer need to choose between affordability and sophistication. Vision AI offers both: precision that scales without excessive infrastructure, and insight that grows without complexity.

The Gotilo-verse, in essence, is not about building smarter machines. It’s about creating calmer ones: systems that observe carefully, decide wisely, and act only when needed.

Because the future of technology will not be defined by how fast it reacts, but by how deeply it understands.

And that understanding always begins with seeing clearly.

This month, we spoke of vision in action, from Japan’s exhibition floors to the growing landscape of the Gotilo-verse, where AI is learning not just to detect, but to understand. We explored precision, patience, and the quiet intelligence that defines our future.

As November begins, we carry these ideas forward to Anaheim, ready to turn insight into impact once again.