Alignment And Overlay Accuracy

Nanometer Precision. Zero Overlay Errors. Layered Perfection Starts Here.

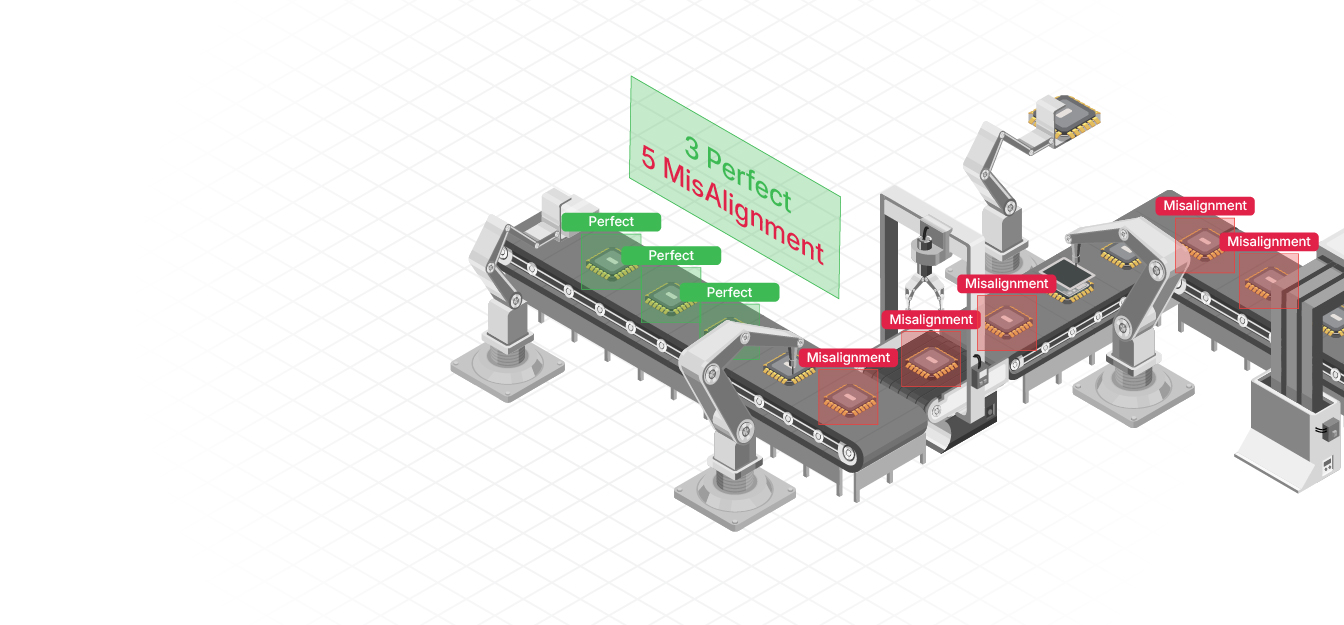

In semiconductor manufacturing, the success of multi-layer lithography depends on one thing: precise overlay. A nanometer-scale misalignment can trigger a cascade of failures: shorts, opens, or pattern mismatches. Traditional overlay systems, though reliable, rely on static calibration and can miss deviations that develop in real time.

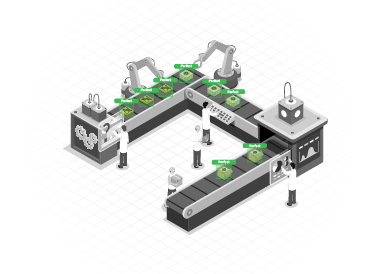

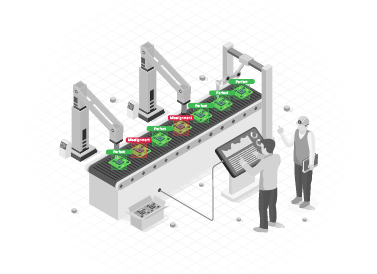

AI-powered Alignment and Overlay Accuracy solutions use real-time computer vision to detect and correct misalignment during lithography. By continuously comparing new patterns with reference layers, AI vision ensures every overlay falls within strict tolerances, even on advanced nodes.

This reduces overlay-related yield loss, cuts rework cycles, and ensures multi-patterning integrity.
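As a rough illustration of comparing a newly exposed pattern with a reference layer, the sketch below estimates the translation between two images using phase correlation. It is a minimal example under stated assumptions, not the product's actual algorithm: the function names `estimate_shift` and `overlay_error_nm` and the `nm_per_pixel` scale are hypothetical, and the estimate is limited to whole-pixel shifts.

```python
import numpy as np


def estimate_shift(reference: np.ndarray, observed: np.ndarray) -> tuple[int, int]:
    """Return the (dy, dx) whole-pixel shift of `observed` relative to `reference`."""
    f_ref = np.fft.fft2(reference)
    f_obs = np.fft.fft2(observed)
    # Normalized cross-power spectrum: keeps only the phase difference.
    cross = f_obs * np.conj(f_ref)
    cross /= np.maximum(np.abs(cross), 1e-12)
    corr = np.fft.ifft2(cross).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks past the midpoint of an axis correspond to negative shifts.
    shift = [p if p <= n // 2 else p - n for p, n in zip(peak, corr.shape)]
    return int(shift[0]), int(shift[1])


def overlay_error_nm(shift_px: tuple[int, int], nm_per_pixel: float) -> float:
    """Convert a pixel shift into an overlay error magnitude in nanometers.

    `nm_per_pixel` is an assumed calibration constant for the imaging setup.
    """
    dy, dx = shift_px
    return float(np.hypot(dy, dx) * nm_per_pixel)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.random((64, 64))
    # Simulate a misaligned exposure by shifting the reference pattern.
    obs = np.roll(ref, shift=(3, -2), axis=(0, 1))
    shift = estimate_shift(ref, obs)
    print("shift (px):", shift)
    print("error (nm):", overlay_error_nm(shift, nm_per_pixel=2.0))
```

A production system would need sub-pixel registration and would also have to handle rotation, magnification, and higher-order distortion, none of which this sketch covers.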

At advanced nodes (5 nm and 3 nm), even tiny overlay shifts can cause electrical shorts or performance drift.

Thermal expansion or tool wear causes gradual shifts that traditional static calibration can’t catch.

Without real-time feedback, overlay errors often go unnoticed until after etching or metrology.

Multiple exposure passes increase the chance of cumulative misalignment, especially on dense layers.

Computer vision verifies overlay precision during exposure, not just in post-processing.

AI models are trained to identify and measure overlay deviation at single-digit nanometer tolerances.

The system learns and predicts overlay drift from historical tool movement, exposure conditions, and material response.

Deviation data is fed into stepper/aligner systems for automatic compensation and dynamic realignment.
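To make the drift-prediction and compensation ideas above concrete, here is a minimal sketch that fits a straight line to past overlay measurements and derives a bounded correction. A linear least-squares fit stands in for the trained model described above. The function names, the `max_step_nm` limit, and the sample numbers are all hypothetical.

```python
import numpy as np


def predict_drift(times_s, offsets_nm, t_next_s: float) -> float:
    """Extrapolate the overlay offset at `t_next_s` from a linear fit of history."""
    slope, intercept = np.polyfit(times_s, offsets_nm, deg=1)
    return float(slope * t_next_s + intercept)


def compensation(predicted_offset_nm: float, max_step_nm: float = 5.0) -> float:
    """Return the correction to apply: the negated prediction, clipped to a safe step.

    `max_step_nm` is an assumed safety limit, not a real tool parameter.
    """
    return float(np.clip(-predicted_offset_nm, -max_step_nm, max_step_nm))


if __name__ == "__main__":
    # Illustrative history: offset grows by 0.5 nm every 10 s (e.g. thermal drift).
    times = [0.0, 10.0, 20.0, 30.0]
    offsets = [0.0, 0.5, 1.0, 1.5]
    predicted = predict_drift(times, offsets, t_next_s=40.0)
    print("predicted offset (nm):", round(predicted, 3))
    print("correction (nm):", round(compensation(predicted), 3))
```

Clipping the correction is a deliberate design choice in this sketch: a bad prediction then cannot command a large stage move in a single step.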

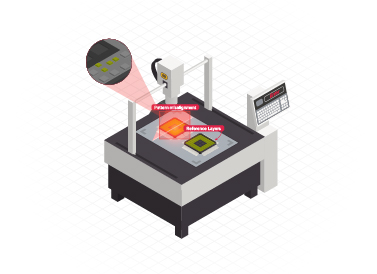

We begin by integrating CAD-based reference layers or GDSII data to set visual baselines for overlay comparison.

Specialized cameras with nanometer resolution are installed near lithography tools. Calibration includes pixel-level correction and motion compensation for fast-moving wafer stages.

Using archived mismatch incidents, our AI is trained to distinguish between acceptable variation and true overlay faults across complex geometries and resist layers.

As patterns are exposed, real-time visual feedback is compared against the reference alignment. Detected deviation values are sent immediately to the scanner or aligner system for correction during the same pass.
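The measure-and-correct loop in the final step can be sketched as a simple feedback controller. This is an illustrative model only: the `OverlayController` class, its `tolerance_nm` and `gain` values, and the `simulate` helper are assumptions made for the example, and a fixed tolerance threshold stands in for the trained model that separates acceptable variation from true overlay faults.

```python
from dataclasses import dataclass


@dataclass
class OverlayController:
    tolerance_nm: float = 2.0   # assumed acceptable overlay deviation
    gain: float = 0.5           # assumed fraction of the error corrected per update
    correction_nm: float = 0.0  # accumulated correction sent to the tool

    def update(self, measured_nm: float) -> float:
        """Process one measurement and return the updated correction."""
        # Deviations inside the tolerance band are treated as acceptable variation.
        if abs(measured_nm) > self.tolerance_nm:
            self.correction_nm -= self.gain * measured_nm
        return self.correction_nm


def simulate(disturbance_nm: float, steps: int) -> list[float]:
    """Return the residual overlay error seen at each step for a constant disturbance."""
    ctrl = OverlayController()
    residuals = []
    for _ in range(steps):
        residual = disturbance_nm + ctrl.correction_nm
        residuals.append(residual)
        ctrl.update(residual)
    return residuals


if __name__ == "__main__":
    print(simulate(disturbance_nm=8.0, steps=5))
```

With these example settings, an 8 nm disturbance is halved on each update until the residual reaches the 2 nm tolerance band, after which no further correction is issued.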
